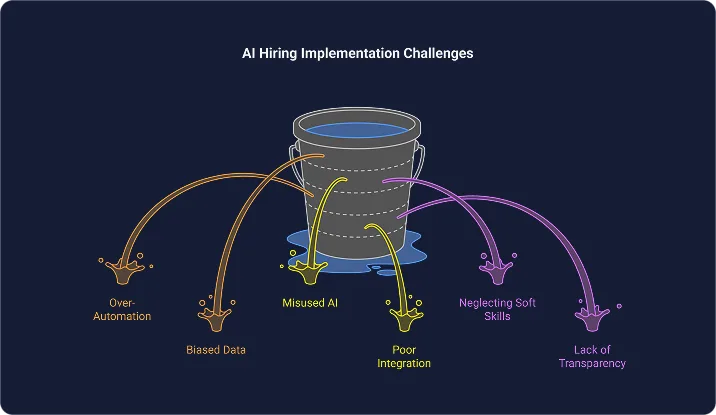

6 Costly Mistakes Companies Make with AI in Hiring

AI is now part of most recruiting workflows. Used well, it speeds up screening, brings consistency to interviews, and gives recruiters clearer insights for decisions. Used poorly, it creates new problems: biased shortlists, candidate drop-offs, and wasted spend.

The core idea is simple: AI belongs in hiring when it augments people rather than replacing them. What makes AI helpful to recruiters and fair to candidates is standardized interviews, structured scoring, and clear reports, not a black-box verdict.

Platforms that emphasize consistent questions, objective criteria, and transparent analytics make it easier to compare candidates on job-relevant signals while reducing subjective drift during evaluation.

In the sections below, you’ll see the six mistakes teams most often make with AI in hiring and the practical moves that fix them.

Key takeaways

Over-reliance on AI creates gaps. Recruiters need to stay involved in reviews, interviews, and final calls to keep context and judgment in the hiring process.

Bias and compliance cannot be ignored. Data, scoring, and candidate notices must be managed carefully to avoid risks and build trust in AI recruitment.

The right setup drives better outcomes. Integrated tools, balanced assessments of both hard and soft skills, and transparent communication help teams hire faster without sacrificing fairness or candidate experience.

Mistake 1: Over-Automating and Replacing Recruiters

AI is valuable in the hiring process, but it is not a substitute for people. When recruiters hand full control to a system, they lose context, and candidates feel like they are dealing with a machine instead of an employer. That combination erodes both trust and quality.

Where this goes wrong

Automated filters screen out strong candidates who took non-traditional paths or had career breaks

One-way video interviews feel transactional without a recruiter to explain expectations or next steps

Recruiters stop questioning the output and accept rankings as the decision

What to do instead

Keep recruiters visible in reviews, shortlists, and final calls

Use AI for support tasks like scheduling, parsing, and transcripts, not judgments about fit

Standardize interview questions and scoring, then let people interpret results and context

AI should reduce repetitive work, but people remain responsible for hiring decisions. Candidates notice the difference, and so does the business.

Mistake 2: Ignoring Biased Data and Compliance Risks

AI recruitment models learn from past data. If that data carries bias, the system repeats it. Companies that ignore this risk end up with skewed shortlists and potential legal exposure. With new rules on audits and candidate notices, treating compliance as optional is no longer realistic.

Where this goes wrong

Old hiring patterns leak into the data, so models learn to favor certain schools, ages, or backgrounds

Opaque scoring makes it impossible to explain why one candidate advanced and another did not

Audits and candidate disclosures are skipped until regulators or complaints force attention

What to do instead

Strip out obvious proxies like graduation year, postcode, or school ranking, and focus only on job-related signals

Run regular checks on pass rates across groups to spot gaps before they become issues

Require vendors to share recent audit results and keep internal notes of your own reviews

Build candidate notices and a clear option for human review into the process from the start

Fairness and compliance are not side projects. A disciplined approach to data and transparency protects candidates, reduces risk, and keeps hiring decisions defensible. New York City’s Local Law 144, for example, requires bias audits of AI recruiting tools and clear notice to applicants before use.
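
The proxy-stripping and pass-rate checks described above can be sketched in a few lines of Python. This is a minimal illustration, not a compliance tool: the field names, group labels, and the 0.8 threshold are assumptions made for the example. The threshold mirrors the widely used four-fifths rule of thumb, which is a screening heuristic rather than a legal test.

```python
from collections import defaultdict

# Hypothetical field names that can act as proxies for protected traits.
PROXY_FIELDS = {"graduation_year", "postcode", "school_ranking"}


def strip_proxies(candidate: dict) -> dict:
    """Return a copy of the candidate record without the proxy fields."""
    return {k: v for k, v in candidate.items() if k not in PROXY_FIELDS}


def pass_rates(outcomes) -> dict:
    """Compute the share of candidates who passed, per group.

    `outcomes` is an iterable of (group_label, passed) pairs.
    """
    totals = defaultdict(int)
    passed = defaultdict(int)
    for group, did_pass in outcomes:
        totals[group] += 1
        if did_pass:
            passed[group] += 1
    return {group: passed[group] / totals[group] for group in totals}


def impact_ratios(rates: dict) -> dict:
    """Divide each group's pass rate by the highest group's pass rate."""
    top = max(rates.values())
    if top == 0:
        # Nobody passed in any group, so every rate is equal.
        return {group: 1.0 for group in rates}
    return {group: rate / top for group, rate in rates.items()}


def flag_gaps(rates: dict, threshold: float = 0.8) -> list:
    """List groups whose impact ratio falls below the assumed threshold."""
    ratios = impact_ratios(rates)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Made-up screening outcomes for two illustrative groups.
    outcomes = (
        [("group_a", True)] * 8 + [("group_a", False)] * 2
        + [("group_b", True)] * 5 + [("group_b", False)] * 5
    )
    rates = pass_rates(outcomes)
    print(rates)
    print(flag_gaps(rates))
```

A flagged group is a prompt for a human review of the screening step, not proof of discrimination on its own. Keep the output with your internal review notes so the check is documented.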

Mistake 3: Misusing AI Interviews and Assessments

AI-led interviews and assessments can make hiring more efficient, but they are not foolproof. When treated as stand-alone verdicts instead of structured inputs, they create stress for candidates and blind spots for recruiters.

Where this goes wrong

Scores from AI interviews are used as the final decision instead of one factor among many

Assessments emphasize technical skills while overlooking soft skills and cultural fit

Candidates feel anxious and disengaged when they do not understand what the system is evaluating

What to do instead

Position AI interview scores as guidance, with recruiters responsible for interpreting results

Balance technical tests with structured behavioral and situational questions to capture soft skills

Share clear instructions with candidates so they know what the interview measures and how results will be used

Provide feedback after assessments to improve transparency and candidate experience

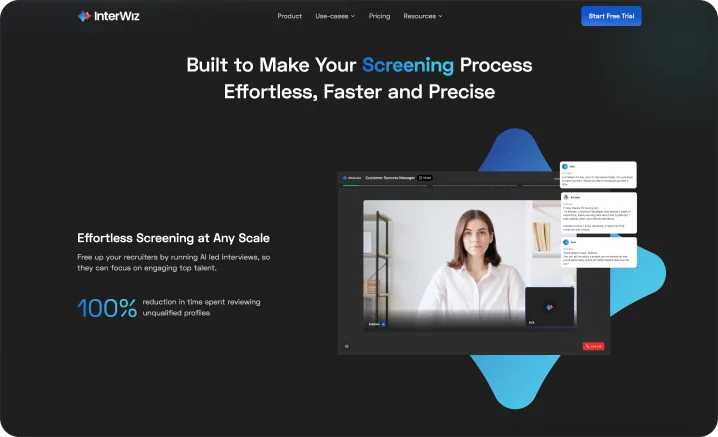

InterWiz takes this approach by combining structured AI-led interviews with recruiter oversight. The platform evaluates both technical and soft skills, but leaves interpretation and final decisions to people.

Mistake 4: Failing to Integrate AI Into Existing Hiring Workflows

AI hiring tools often get added on top of existing systems. When they don’t connect smoothly to your ATS or HR software, recruiters end up duplicating work, and candidates slip through the cracks. Instead of saving time, the technology creates silos.

Where this goes wrong

Recruiters copy data between systems, slowing the process and increasing errors

Candidate updates are missed because interview notes, assessments, and scheduling sit in separate tools

Reports lack a full picture, making it harder for leaders to track funnel health and hiring outcomes

What to do instead

Choose AI hiring tools that connect natively with your ATS, HRIS, and calendar systems

Centralize interview scores, notes, and transcripts in one platform so recruiters have a single source of truth

Set up automated handoffs: when an interview ends, results flow into the ATS and trigger the next step

Involve IT and recruiting leaders early to map integrations before rolling out new AI solutions

Without integration, recruiters face more admin work, and candidates lose out on timely communication. InterWiz is designed to avoid this pitfall by handling everything from screening to interviews to decision support within a single, connected workflow.
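
To make the automated handoff idea concrete, here is a minimal Python sketch. Everything in it is hypothetical: `InMemoryATS` stands in for whatever client your ATS vendor provides, and the stage name `recruiter_review` is an assumption chosen so that a person, not the tool, takes the next step.

```python
from dataclasses import dataclass, field


@dataclass
class InterviewResult:
    candidate_id: str
    score: float
    transcript: str


@dataclass
class InMemoryATS:
    """Stand-in for a real ATS client; a real integration would call the vendor's API."""

    records: dict = field(default_factory=dict)
    stages: dict = field(default_factory=dict)

    def attach_result(self, result: InterviewResult) -> None:
        # Keep every result on the candidate's record so nothing lives in a silo.
        self.records.setdefault(result.candidate_id, []).append(result)

    def move_to_stage(self, candidate_id: str, stage: str) -> None:
        self.stages[candidate_id] = stage


def on_interview_completed(result: InterviewResult, ats: InMemoryATS, notify) -> None:
    """Handoff: store the result, advance the candidate, and alert a recruiter."""
    ats.attach_result(result)
    ats.move_to_stage(result.candidate_id, "recruiter_review")
    notify(f"Interview for {result.candidate_id} is ready for recruiter review")


if __name__ == "__main__":
    ats = InMemoryATS()
    on_interview_completed(
        InterviewResult("cand-001", 72.5, "transcript text"),
        ats,
        notify=print,
    )
    print(ats.stages)
```

The design choice worth noting is that the handoff routes every completed interview to a recruiter rather than advancing or rejecting candidates on the score alone.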

Mistake 5: Neglecting Soft Skills and Cultural Fit

AI hiring tools tend to focus on what’s easiest to measure: technical skills, test scores, and keyword matches. But long-term success in a role often depends more on communication, adaptability, and cultural fit. When those qualities are overlooked, new hires may meet the job description but fail in the workplace.

Where this goes wrong

Hiring is driven by resumes and technical assessments, while skills like teamwork, communication, and problem-solving get ignored

Candidates who score high on hard skills are chosen even when they cannot collaborate effectively

Teams experience higher turnover because culture and attitude are not factored into the process

What to do instead

Include structured behavioral questions and situational judgment tests in AI-led interviews

Use standardized scoring rubrics for soft skills so recruiters can compare candidates fairly

Train hiring managers to weigh interpersonal and cultural indicators alongside technical ability

Capture both quantitative (test scores) and qualitative (behavioral insights) data in the same workflow

Hiring for skills alone is not enough. Bringing soft skills and cultural fit into the evaluation process reduces turnover, improves team performance, and helps companies make hiring decisions that last.

InterWiz makes this easier by combining technical assessments with behavioral, language, and personality evaluations in one platform, so recruiters see the full picture before making a call.
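
As a rough sketch of a standardized soft-skill rubric, the Python below scores every candidate on the same criteria and keeps the result next to the technical score and the interviewer's notes. The skill names, the 1 to 5 rating scale, and the weights are assumptions for illustration only, not recommended values.

```python
# Hypothetical rubric: each soft skill is rated 1-5 against written anchors.
# The weights are illustrative and sum to 1.0.
RUBRIC_WEIGHTS = {
    "communication": 0.4,
    "teamwork": 0.3,
    "problem_solving": 0.3,
}


def soft_skill_score(ratings: dict) -> float:
    """Weighted average of rubric ratings; rejects incomplete or out-of-range input."""
    missing = set(RUBRIC_WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"Missing ratings for: {sorted(missing)}")
    for skill in RUBRIC_WEIGHTS:
        if not 1 <= ratings[skill] <= 5:
            raise ValueError(f"Rating for {skill} must be between 1 and 5")
    return sum(RUBRIC_WEIGHTS[skill] * ratings[skill] for skill in RUBRIC_WEIGHTS)


def candidate_summary(name: str, test_score: float, ratings: dict, notes: str) -> dict:
    """Keep quantitative and qualitative signals together in one record."""
    return {
        "candidate": name,
        "technical_test_score": test_score,
        "soft_skill_score": round(soft_skill_score(ratings), 2),
        "behavioral_notes": notes,
    }


if __name__ == "__main__":
    summary = candidate_summary(
        "Candidate A",
        test_score=82.0,
        ratings={"communication": 4, "teamwork": 5, "problem_solving": 3},
        notes="Explained trade-offs clearly; asked for feedback during the exercise.",
    )
    print(summary)
```

Requiring a rating for every criterion is deliberate: it stops a strong technical score from quietly standing in for a soft skill nobody assessed.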

Mistake 6: Neglecting Transparency and Candidate Experience

When applicants are left guessing about what the AI system measures or whether a human is involved, trust erodes and top talent walks away.

Where this goes wrong

Candidates are not told when AI recruiting tools are screening or scoring their applications

No feedback is shared after skill assessments, leaving applicants frustrated and disengaged

Automated steps replace human touchpoints, making the process feel cold and impersonal

What to do instead

Be clear with candidates about where AI is used and what it evaluates

Provide timely updates throughout the hiring process, especially after assessments or interviews

Keep a human point of contact available for questions or concerns

Share constructive feedback where possible, so applicants leave with value even if they are not selected

Transparency builds trust. When candidates know how AI is being used and feel that people are still part of the process, they are more engaged and more likely to view the company positively, even if they don’t get the job.

How InterWiz Helps Teams Avoid These AI Hiring Mistakes

The fix for each of the six mistakes we’ve covered comes down to the same theme: use AI to support hiring, not to replace people. That’s the approach InterWiz was built on.

From screening to interviews, the platform automates repetitive steps while keeping recruiters in control of the outcome

Standardized interview templates and scoring rubrics ensure consistency and reduce bias without turning decisions into black-box verdicts

Integrated technical, behavioral, and cultural assessments give recruiters a fuller picture of candidate potential

Built-in integration with ATS and HR systems means results flow into existing workflows, not into silos

Transparent reports and transcripts help recruiters explain results internally and give constructive feedback to candidates

Bottom Line: Avoiding AI Hiring Mistakes & Next Steps

AI tools can transform hiring, but only if they’re applied with care. The six mistakes we’ve covered, from over-automation to poor transparency, show how quickly good intentions can go wrong. The real winners are companies that combine AI’s efficiency with human oversight, fairness, and candidate trust.

Next steps for HR leaders and recruiters:

Audit your current hiring process: Identify where AI is already in use and check for gaps in fairness, integration, or candidate communication.

Evaluate your AI vendors: Ask for bias audits, integration capabilities, and clarity on how assessments are scored.

Redesign candidate touchpoints: Ensure applicants know when the AI hiring tool is used, provide timely updates, and keep human contact available.

Train recruiters to use AI wisely: Position AI as a decision-support tool, not a replacement for human judgment.

Ready to avoid these AI hiring mistakes? AI recruitment platforms like InterWiz demonstrate how structured AI interviews, bias-aware scoring, and seamless integration can boost efficiency while keeping the human element front and center.

FAQs about AI Hiring Mistakes

Does AI replace the need for human recruiters?

No. AI can automate repetitive tasks like scheduling or resume parsing, but it can’t replace empathy, judgment, or cultural fit assessments. The most effective hiring strategies use AI as a support tool, with recruiters making final decisions.

How do companies prevent AI bias in hiring?

The key is regular auditing and transparency. Employers should request independent bias audits, ensure training data is diverse and job-related, and standardize evaluation criteria. Human oversight at the final stage helps reduce unintended discrimination.

What types of roles benefit most from AI interviews?

AI interviews work well for roles with high applicant volume (e.g., customer service, sales, entry-level tech positions), where consistent screening saves time. For specialized roles, they help structure the process, but human recruiters should dig deeper into soft skills and cultural fit.

What U.S. regulations should employers know about AI in hiring?

New York City’s Local Law 144 requires annual bias audits and candidate notifications for AI hiring tools, which the law calls automated employment decision tools. Illinois’ Artificial Intelligence Video Interview Act requires candidate consent and disclosure of how AI evaluates video interviews. Employers nationwide should also follow EEOC guidance on fairness and non-discrimination.
